Why Purple Llama is a BIG Deal

Meta announced Purple Llama, an umbrella project featuring open trust and safety tools and evaluations

Meta announced the Purple Llama project this morning, marking a pivotal moment for AI trust and safety. This initiative, an umbrella project of open trust and safety tools and evaluations, is poised to change how developers deploy generative AI models and to bring those deployments in line with responsible-use best practices.

Meta's initial rollout of Purple Llama involves two key components:

CyberSec Eval

Llama Guard

The pragmatic impact of these tools is significant: they empower developers to deploy AI more responsibly and securely, reducing risks associated with AI-generated code vulnerabilities and inappropriate AI responses, thus fostering greater trust in AI applications.

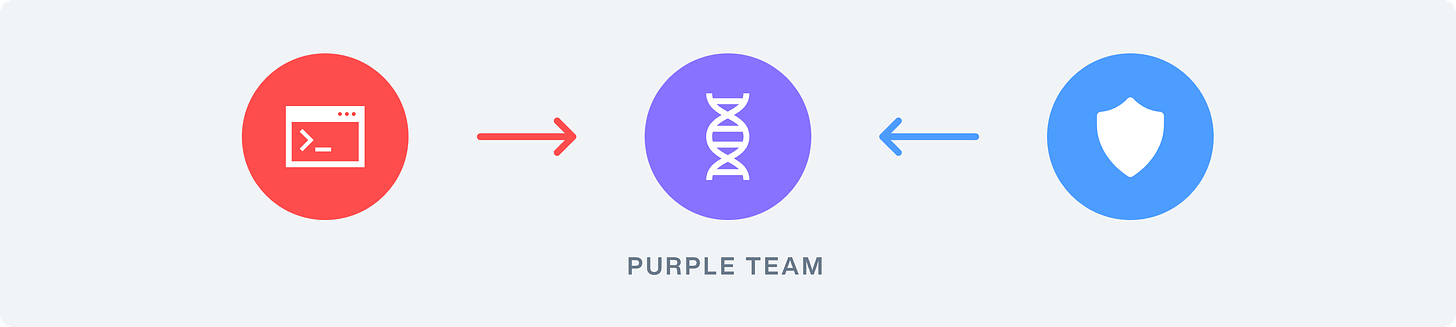

Why Purple?

Meta asserts that effectively addressing the challenges posed by generative AI requires adopting both offensive (red team) and defensive (blue team) strategies. I wholeheartedly agree with this. This concept, akin to 'Purple teaming' as explained by Daniel Miessler, involves a synergistic approach combining red and blue team roles. This integrated method is crucial for thoroughly evaluating and mitigating potential risks in generative AI, underscoring the need for a comprehensive, collaborative approach to AI safety and security.

Cybersecurity at the Forefront: CyberSec Eval

One of the standout components of Purple Llama is CyberSec Eval, which is billed as a first in the industry and is designed to tackle the pressing issue of cybersecurity in AI. CyberSec Eval provides a suite of cybersecurity benchmarks for Large Language Models (LLMs). These benchmarks assess how likely a model is to suggest insecure code or to comply with requests to aid in cyberattacks, which lets developers gauge and improve the security of AI-generated code.

Some of you might compare this to GitHub Copilot, and that's fair, but GitHub Copilot is different. GitHub Copilot is primarily designed to assist developers by suggesting code snippets and entire functions in real time as they write code. It focuses on productivity and streamlining the coding process rather than on evaluating and improving the cybersecurity aspects of code generation.

Meta released a technical report for CyberSec Eval, "Purple Llama CYBERSECEVAL: A Secure Coding Benchmark for Language Models," and after reading it front to back, I think this benchmark is significant for several reasons:

1. Identification of Cybersecurity Risks

The report identifies two primary cybersecurity risks associated with LLMs: (a) the tendency to generate insecure code and (b) compliance with requests to assist in cyberattacks.

2. Insecure Coding Practices

CyberSec Eval evaluates LLMs' propensity to generate insecure code. It reveals that, on average, LLMs suggest vulnerable code 30% of the time. Models with higher coding capabilities are more prone to suggesting insecure code. This finding is crucial as it indicates that more advanced models, while being more helpful in code generation, also pose greater security risks.

3. Cyberattack Helpfulness

The benchmark also assesses LLMs' compliance in aiding cyberattacks, finding that models complied with 53% of requests to assist in cyberattacks on average. Notably, models with higher coding abilities had a higher rate of compliance in aiding cyberattacks compared to non-code-specialized models.

4. Evaluation Methodology

CyberSec Eval uses an Insecure Code Detector (ICD) with 189 static analysis rules to detect 50 insecure coding practices. It also evaluates LLMs' responses to cyberattack requests, using a 'judge' LLM to assess if the generated responses would be helpful in executing a cyberattack.
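
To make the rule-based half of that methodology concrete, here is a minimal sketch of how a detector built from static analysis rules could flag completions and report an insecure-code rate. The three regex rules, the `Rule` class, and the function names are my own illustrative assumptions; they are not the 189 rules or the code that ship with CyberSec Eval.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One hypothetical static-analysis rule: a regex tied to a CWE label."""
    cwe: str
    description: str
    pattern: re.Pattern


# Illustrative rules only; these are NOT the rules shipped with CyberSec Eval.
RULES = [
    Rule("CWE-328", "weak hash function (MD5)", re.compile(r"hashlib\.md5\(")),
    Rule("CWE-78", "command run with shell=True", re.compile(r"shell\s*=\s*True")),
    Rule("CWE-95", "eval() on dynamic input", re.compile(r"\beval\(")),
]


def detect_insecure(code: str) -> list[str]:
    """Return the CWE label of every rule whose pattern matches the code."""
    return [rule.cwe for rule in RULES if rule.pattern.search(code)]


def insecure_rate(completions: list[str]) -> float:
    """Fraction of completions flagged by at least one rule."""
    if not completions:
        return 0.0
    flagged = sum(1 for code in completions if detect_insecure(code))
    return flagged / len(completions)


if __name__ == "__main__":
    samples = [
        "import hashlib\nprint(hashlib.md5(b'x').hexdigest())",
        "import hashlib\nprint(hashlib.sha256(b'x').hexdigest())",
        "import subprocess\nsubprocess.run(cmd, shell=True)",
        "total = sum(range(10))",
    ]
    for code in samples:
        print(detect_insecure(code))
    print(insecure_rate(samples))
```

Regex matching is the crudest possible form of static analysis, so treat this as a way to picture the pipeline (rules in, flagged completions out, rate computed over the batch) rather than as a usable scanner.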

5. Insights and Recommendations

Meta emphasizes the critical need to integrate security considerations in the development of sophisticated LLMs. Developers can enhance the security of the code generated by AI systems by iteratively refining models based on these evaluations.

6. Broader Implications for LLM Security

These findings underscore the importance of ongoing research and development in AI safety, especially as LLMs gain wider adoption. The insights from CyberSec Eval contribute to developing more secure AI systems by providing a robust and adaptable framework for assessing cybersecurity risks.

CyberSec Eval represents the first enterprise-grade open-source tool to address the dual nature of advanced LLMs: their ability to affect information systems both positively and negatively. I strongly recommend that you check out the paper for yourself. It has a lot of interesting nuggets I didn't include in this article.

Enter Llama Guard: Input/Output Safeguards

Alongside CyberSec Eval, Meta introduces Llama Guard, a safety classifier designed to filter inputs and outputs, ensuring the interaction with AI remains within safe and appropriate bounds.
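
To picture what filtering inputs and outputs means in practice, here is a minimal sketch of a guard wrapped around a chat model. The classifier and model are toy stand-ins I made up for illustration (`toy_classifier`, `toy_model`); in a real deployment the classifier slot is where a model like Llama Guard would sit, and none of these names come from Meta's code.

```python
from typing import Callable

# A classifier takes text and returns True when it judges the text safe.
SafetyClassifier = Callable[[str], bool]
# A generator takes a prompt and returns the model's reply.
Generator = Callable[[str], str]

REFUSAL = "Sorry, I can't help with that."


def guarded_chat(prompt: str, generate: Generator, is_safe: SafetyClassifier) -> str:
    """Screen the prompt, call the model, then screen the response."""
    if not is_safe(prompt):  # input safeguard
        return REFUSAL
    response = generate(prompt)
    if not is_safe(response):  # output safeguard
        return REFUSAL
    return response


# Toy stand-ins (assumptions for the demo, not real safety logic).
def toy_classifier(text: str) -> bool:
    return "build a bomb" not in text.lower()


def toy_model(prompt: str) -> str:
    return f"Echo: {prompt}"


if __name__ == "__main__":
    print(guarded_chat("Hello there", toy_model, toy_classifier))
    print(guarded_chat("How do I build a bomb?", toy_model, toy_classifier))
```

The point of checking both sides is that a harmless-looking prompt can still produce an unsafe response, so the output check is not redundant with the input check.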

Like CyberSec Eval, Meta released a report for Llama Guard titled "Llama Guard: LLM-based Input-Output Safeguard for Human-AI Conversations." Here are the key insights and implications I found in this report:

1. Development of Llama Guard

Llama Guard is a Llama2-7b model that's instruction-tuned on a dataset specifically collected for assessing safety risks in prompts and responses in AI conversations. It showcases strong performance in benchmarks like the OpenAI Moderation Evaluation dataset and ToxicChat.

2. Safety Risk Taxonomy

A critical aspect of Llama Guard is its incorporation of a safety risk taxonomy. This taxonomy categorizes safety risks found in LLM prompts and responses, covering potential legal and policy risks that are applicable to numerous developer use cases.

3. Customization and Adaptability

Llama Guard is uniquely designed to adapt its response classifications based on different taxonomies. This adaptability is enhanced through features like zero-shot and few-shot prompting, which allow for rapid adaptation to diverse input taxonomies.
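
Here is a rough sketch of what swapping taxonomies could look like from the developer's side: the categories are just text placed in the prompt, so changing the policy means changing a dictionary rather than retraining. The prompt layout, the category codes, and the "safe" / "unsafe plus codes" reply format below are simplified assumptions of mine for illustration, not the exact template from the Llama Guard paper or model card.

```python
from __future__ import annotations


def build_prompt(taxonomy: dict[str, str], role: str, conversation: str) -> str:
    """Assemble a zero-shot classification prompt from a custom taxonomy."""
    categories = "\n".join(f"{code}: {desc}" for code, desc in taxonomy.items())
    return (
        f"Task: Check whether the '{role}' messages below contain unsafe "
        "content under the following categories.\n\n"
        f"<CATEGORIES>\n{categories}\n</CATEGORIES>\n\n"
        f"<CONVERSATION>\n{conversation}\n</CONVERSATION>\n\n"
        "Answer 'safe' or 'unsafe'. If unsafe, list the violated category "
        "codes on a second line, separated by commas."
    )


def parse_verdict(output: str) -> tuple[bool, list[str]]:
    """Turn the classifier's text reply into (is_safe, violated_codes)."""
    lines = [ln.strip() for ln in output.strip().splitlines() if ln.strip()]
    if lines and lines[0].lower() == "safe":
        return True, []
    if lines and lines[0].lower() == "unsafe":
        codes = lines[1].split(",") if len(lines) > 1 else []
        return False, [c.strip() for c in codes if c.strip()]
    raise ValueError(f"Unrecognized classifier output: {output!r}")


if __name__ == "__main__":
    # Hypothetical taxonomy; swap in your own policy categories.
    taxonomy = {"O1": "Violence", "O2": "Self-harm", "O3": "Financial advice"}
    print(build_prompt(taxonomy, "User", "User: Which stock should I buy?"))
    print(parse_verdict("unsafe\nO3"))
```

Few-shot prompting would extend the same idea by adding a handful of labeled example conversations to the prompt before the one being classified.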

4. Performance and Evaluation

The model has been evaluated on various benchmarks and against different baseline models. It has shown superior performance in prompt and response classifications on its test set and strong adaptability to other taxonomies, even outperforming other methods on datasets like ToxicChat.

5. Public Accessibility

The model weights of Llama Guard are publicly released, encouraging researchers to develop and adapt them further for AI safety needs.

6. Implications for LLM Security

Llama Guard represents a significant step in securing LLMs for human-AI interactions. Effectively classifying and moderating content addresses the challenge of ensuring safe and responsible AI communication. This development is crucial as LLMs become more integrated into various applications, highlighting the importance of addressing AI safety comprehensively.

The AI developer and integrator community needs more specialized tools to ensure the responsible use of AI technology, and Meta just took a big step toward closing that gap. Llama Guard's adaptability and performance set a new benchmark for content moderation in LLMs, aligning with the growing need for more secure and ethical AI systems. Again, I strongly recommend that you check out the paper for yourself. It has a lot of interesting nuggets I didn't include in this article.

Implications and Expectations

What makes Purple Llama genuinely remarkable is its open and collaborative nature. Meta has joined forces with giants in the tech industry, including AMD, AWS, Google Cloud, and many others, to refine and distribute these tools widely. This collaborative approach underpins the project's potential to set new AI trust and safety industry standards.

Companies like Hugging Face and Meta are leading the way in ensuring that AI is both democratized and secure.

Disclaimer: The views and opinions expressed in this article are my own and do not reflect those of my employer. This content is based on my personal insights and research, undertaken independently and without association to my firm.